Processing statistics files

In this example we show how one can use pysemtools to process statistics

Import general modules

[1]:

# Import required modules

from mpi4py import MPI #equivalent to the use of MPI_init() in C

import matplotlib.pyplot as plt

import numpy as np

# Get mpi info

comm = MPI.COMM_WORLD

# Hide the log for the notebook. Not recommended when running in clusters as it is better you see what happens

import os

os.environ["PYSEMTOOLS_HIDE_LOG"] = 'true'

Index the files

The first step we will do is to index the files that we will use for this example. By indexing we mean that we will gather a json file that will contain all the information of the files we want to process. We do this to ease the process of averaging, since we can easily inspect what are the attributes of the files.

In our case we will index statistics files produced from neko, but one could also index other type of files. This only works for nek5000 type of files.

you can check the documentation for this. We note that at this stage, it is important to include the time interval in the indexing.

[2]:

from pysemtools.postprocessing.file_indexing import index_files_from_folder

index_files_from_folder(comm, folder_path="../data/sem_data/statistics/cylinder_rbc_nelv_600/", run_start_time=200, stat_start_time=200, output_folder="./", file_type=["mean_field.fld", "stats.fld"], include_file_contents=True, include_time_interval=True)

=========================================================================================

Now we have written a file index with the two types of files in the input folder. If you inspect the file, you will see that there is a lot of information regarding the files. We will use this info to average them in time.

Average in time

For this, we simply give as inputs the files that we just wrote.

In this case, we are choosing the output batch lenght to be a very large number, such that we do not get average batches, but instead we average all the files. The mesh index indicates which is the json index of the files that contain the mesh.

You can check more about the arguments of the functions if you inspect them.

[3]:

from pysemtools.postprocessing.statistics.time_averaging import average_field_files

# Average the mean fields

average_field_files(comm, field_index_name="mean_field_index.json", output_folder="./", output_name="low_order_stats", output_batch_t_len=50000, mesh_index="0", dtype=np.single, rel_tol=0.05, output_word_size=4, write_mesh=True)

# Average the stats fields

average_field_files(comm, field_index_name="stats_index.json", output_folder="./", output_name="high_order_stats", output_batch_t_len=50000, mesh_index="0", dtype=np.single, rel_tol=0.05, output_word_size=4, write_mesh=True)

=================================================================================================

=================================================================================================

=================================================================================================

=================================================================================================

done! now you have obtained some fld files that contain 3D time averaged data in an spectral mesh. With this, you can easily visualize it in paraview or visit.

Interpolate

The data for this example corresponds to a simulation on a cylinder. Because of this we would like to interpolate form the spectral element mesh into a cylindrical mesh.

You can see example 4.5 to see how this mesh is created. In here, we use the results from that script to interpolate the data.

1. index the new outputs

We will initially index the new outputs just to have a convenient way to know the path of our data.

[4]:

index_files_from_folder(comm, folder_path="./", output_folder="./", file_type=["low_order_stats.fld", "high_order_stats.fld"], include_file_contents=False, include_time_interval=False)

=========================================================================================

2. Use wrapper function to interpolate

now that we have indexed, we can use this to interpolate. We will use a wrapper function to interpolate the contents of the files. You can check example 4.6 for more details.

For this, we first need to prepare a “settings” file

[5]:

from pysemtools.interpolation.wrappers import interpolate_fields_from_disk

# Set the inputs for the first set of files

query_points_fname = "../4-interpolation/points.hdf5"

sem_mesh_fname = "./low_order_stats0.f00000"

interpolated_fields_output_fname = "./low_order_stats_interpolated.hdf5"

field_interpolation_dictionary = {}

field_interpolation_dictionary['input_type'] = "file_index"

field_interpolation_dictionary['file_index'] = f"low_order_stats_index.json"

field_interpolation_dictionary['fields_to_interpolate'] = ["all"]

interpolate_fields_from_disk(comm, query_points_fname, sem_mesh_fname, field_interpolation_dictionary, interpolated_fields_output_fname=interpolated_fields_output_fname)

# Set the inputs for the second set of files

query_points_fname = "../4-interpolation/points.hdf5"

sem_mesh_fname = "./high_order_stats0.f00000"

interpolated_fields_output_fname = "./high_order_stats_interpolated.hdf5"

field_interpolation_dictionary = {}

field_interpolation_dictionary['input_type'] = "file_index"

field_interpolation_dictionary['file_index'] = f"high_order_stats_index.json"

field_interpolation_dictionary['fields_to_interpolate'] = ["all"]

interpolate_fields_from_disk(comm, query_points_fname, sem_mesh_fname, field_interpolation_dictionary, interpolated_fields_output_fname=interpolated_fields_output_fname)

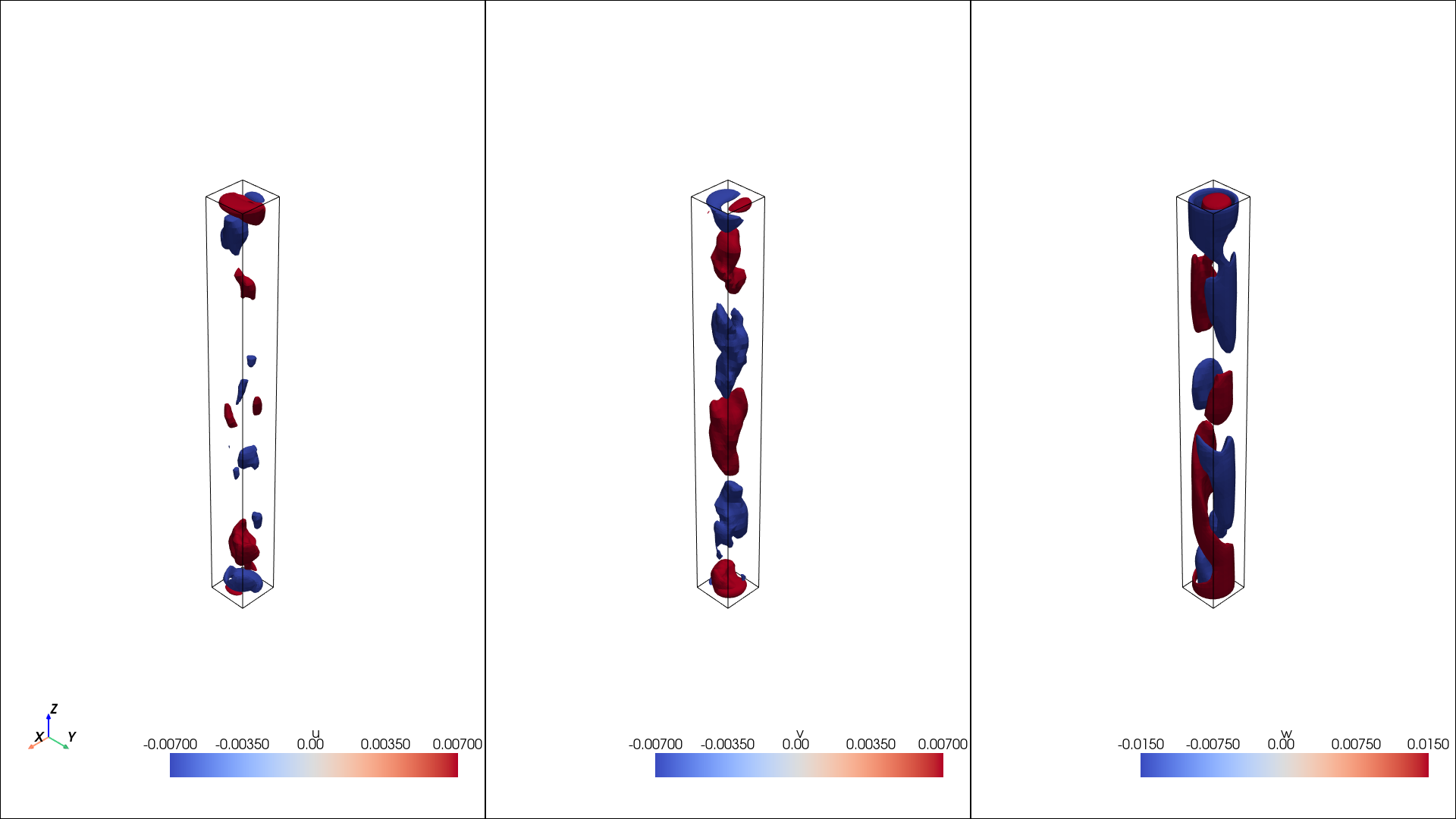

Visualize the data in the cylindrical mesh

We can now load the data and check it out.

[6]:

import h5py

import pyvista as pv

from IPython.display import Image, display

# Load the mesh

with h5py.File(query_points_fname, 'r') as f:

x = f["x"][:]

y = f["y"][:]

z = f["z"][:]

# Load the interpolated data

with h5py.File("./low_order_stats_interpolated00001.hdf5", 'r') as f:

u = f["u"][:]

v = f["v"][:]

w = f["w"][:]

# Create a structured mesh

mesh_1 = pv.StructuredGrid(x, y, z)

mesh_1.point_data["u"] = u.ravel(order='F')

mesh_1.point_data["v"] = v.ravel(order='F')

mesh_1.point_data["w"] = w.ravel(order='F')

# Plot

pl = pv.Plotter(shape=(1, 3), window_size=[1920,1080]) # Size in pixels

pl.add_axes()

# plot first row

pl.subplot(0, 0)

isos = mesh_1.contour(scalars = "u", isosurfaces = [-0.007, 0.007])

pl.add_mesh(mesh_1.outline(), color="k")

pl.add_mesh(isos, opacity=1, cmap="coolwarm")

pl.subplot(0, 1)

isos = mesh_1.contour(scalars = "v", isosurfaces = [-0.007, 0.007])

pl.add_mesh(mesh_1.outline(), color="k")

pl.add_mesh(isos, opacity=1, cmap="coolwarm")

pl.subplot(0, 2)

isos = mesh_1.contour(scalars = "w", isosurfaces = [-0.015, 0.015])

pl.add_mesh(mesh_1.outline(), color="k")

pl.add_mesh(isos, opacity=1, cmap="coolwarm")

#pl.show()

# Capture the plot as an image and show it

image_path = "static_plot.png"

pl.screenshot(image_path)

pl.close()

display(Image(filename=image_path))

field keys

The interpolated fields and also the fields read from nek5000 have a set of given keys. One could know what each of the keys represent by heart, but we have also provided some “indices” to help identify what is what.

It can be used as folows:

[7]:

from pysemtools.postprocessing.statistics.file_field_index import file_field_index

# Specify the source of the data

key_index = file_field_index(source_code="Neko_old") # Neko old alludes to statistics written between 2 files

print(key_index["U"]["pynek_field_key"])

u

see that printing the field_key of U gives

Rotate the data to cylindrical coordinates

In this instance, it is beneficial to rotate the data given the shape of our domain

Some of the terms that we have in our files are tensors and require special treatment. However we will rotate only some of the quantities.

Here we will go through the tensors and apply rotations depending on what is needed since the rotation must be applied differently depending on the rank of the tensor.

The user must provide a rotation matrix fuction that generates a rotation matrix given the coordinates. For this case, we can use one included in pysemtools, since this is simply a cylinder.

The tensor_components list should contain the keys to be used to identify the tensor components in the file.

[8]:

from pysemtools.postprocessing.rotations import cartesian_to_cylindrical_rotation_matrix as rotation_matrix

from pysemtools.postprocessing.rotations import rotate_tensor

# Specify the components of the tensor. This should match the key in the file

tensor_components = [key_index["U"]["pynek_field_key"], key_index["V"]["pynek_field_key"], key_index["W"]["pynek_field_key"]]

rotate_tensor(comm, query_points_fname, "./low_order_stats_interpolated00001.hdf5", tensor_components, rotation_matrix, output_file="./rotated_mean_fields.hdf5", output_field_names=["U_r", "U_th", "U_z"])

# Specify the components of the tensor. This should match the key in the file

tensor_components = [key_index["UU"]["pynek_field_key"], key_index["UV"]["pynek_field_key"], key_index["UW"]["pynek_field_key"], key_index["UV"]["pynek_field_key"], key_index["VV"]["pynek_field_key"], key_index["VW"]["pynek_field_key"], key_index["UW"]["pynek_field_key"], key_index["VW"]["pynek_field_key"], key_index["WW"]["pynek_field_key"]]

rotate_tensor(comm, query_points_fname, "./high_order_stats_interpolated00001.hdf5", tensor_components, rotation_matrix, output_file="./rotated_reynolds_stress.hdf5", output_field_names=["U_rU_r", "U_rU_th", "U_rU_z", "U_thU_r", "U_thU_th", "U_thU_z", "U_zU_r", "U_zU_th", "U_zU_z"])

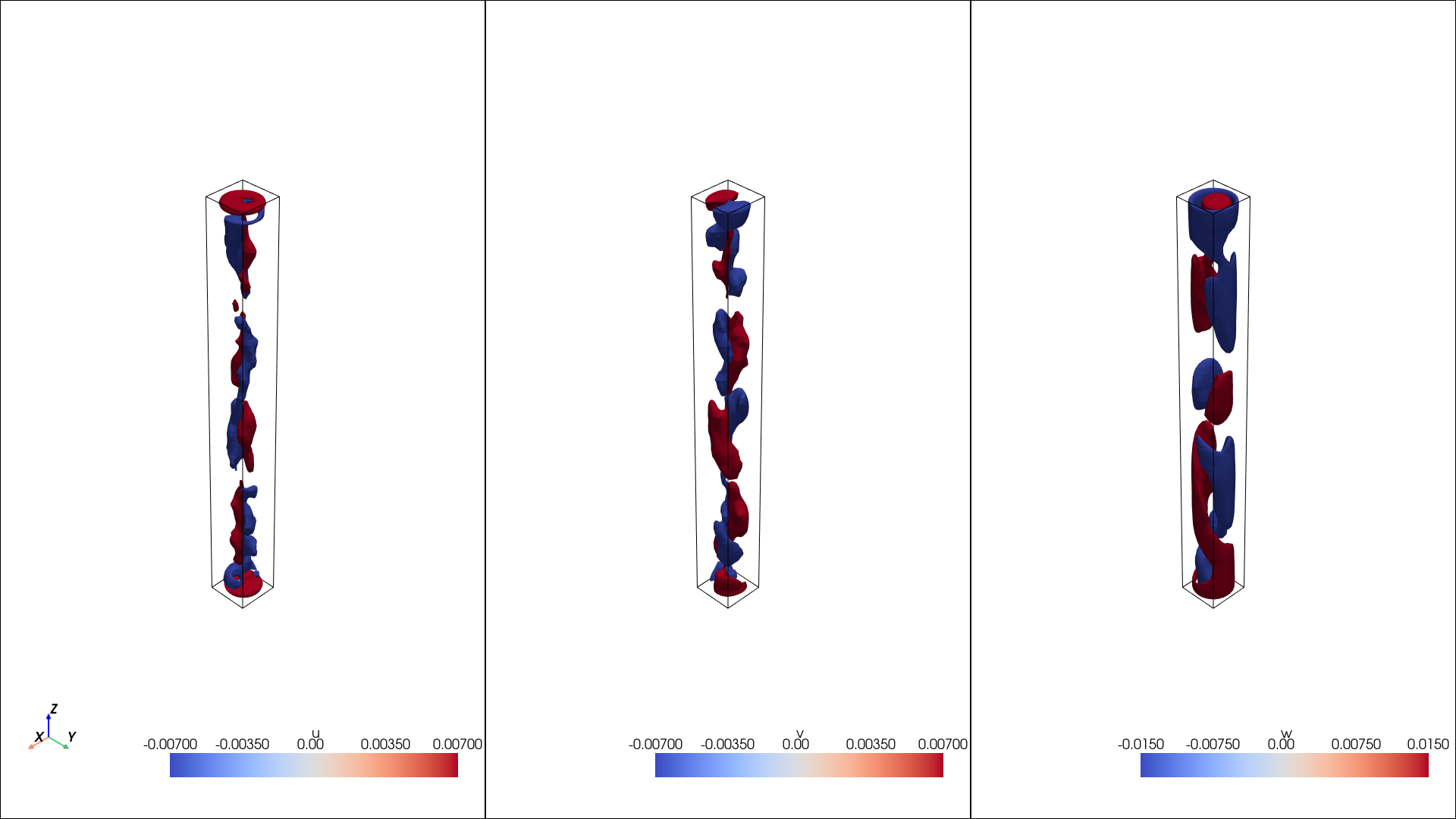

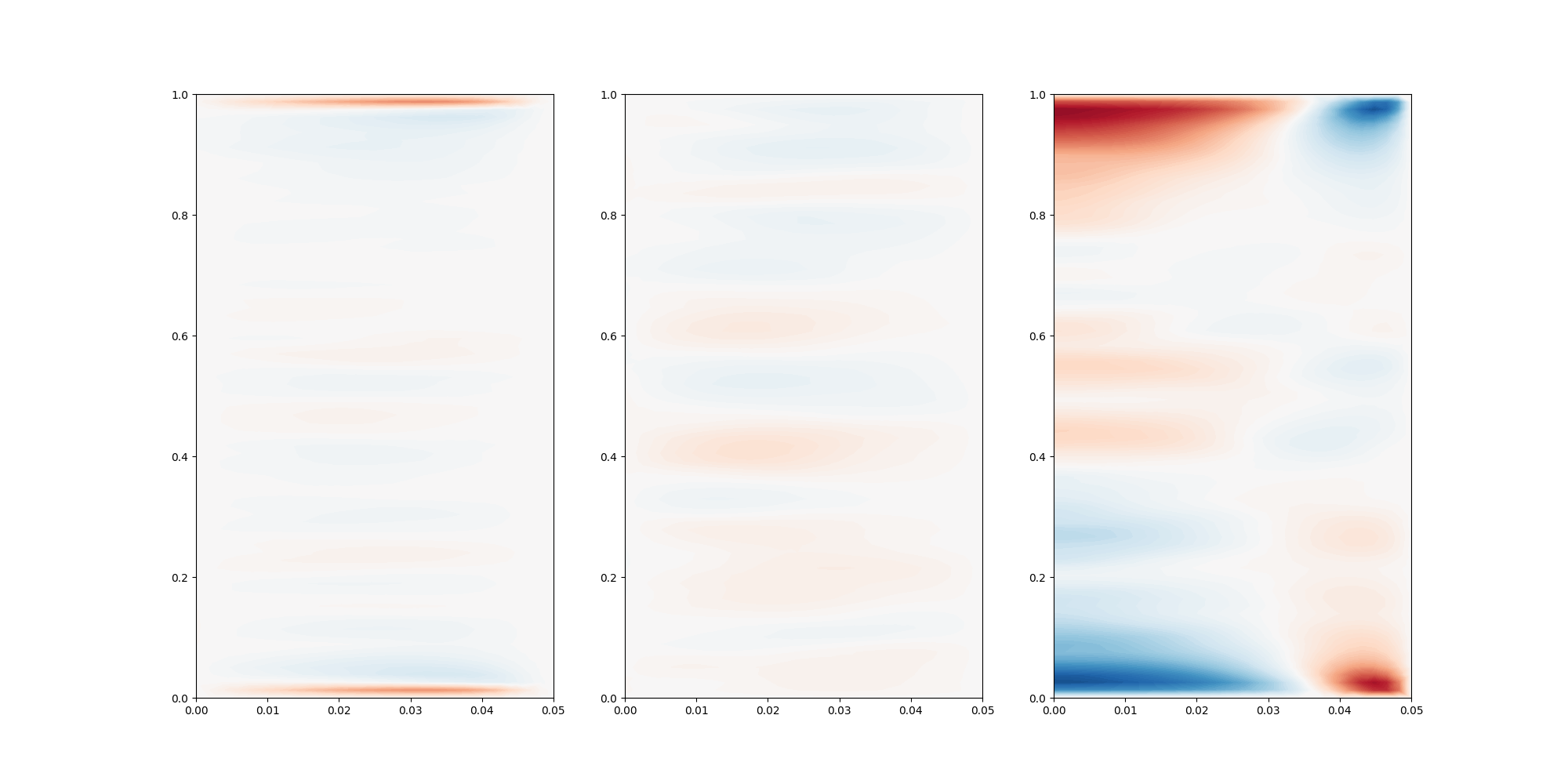

Visualize the rotated mean

Now check out how the rotated quantities look

[9]:

# Load the mesh

with h5py.File(query_points_fname, 'r') as f:

x = f["x"][:]

y = f["y"][:]

z = f["z"][:]

# Load the interpolated data

with h5py.File("./rotated_mean_fields.hdf5", 'r') as f:

u = f["U_r"][:]

v = f["U_th"][:]

w = f["U_z"][:]

# Create a structured mesh

mesh_1 = pv.StructuredGrid(x, y, z)

mesh_1.point_data["u"] = u.ravel(order='F')

mesh_1.point_data["v"] = v.ravel(order='F')

mesh_1.point_data["w"] = w.ravel(order='F')

# Plot

pl = pv.Plotter(shape=(1, 3), window_size=[1920,1080]) # Size in pixels

pl.add_axes()

# plot first row

pl.subplot(0, 0)

isos = mesh_1.contour(scalars = "u", isosurfaces = [-0.007, 0.007])

pl.add_mesh(mesh_1.outline(), color="k")

pl.add_mesh(isos, opacity=1, cmap="coolwarm")

pl.subplot(0, 1)

isos = mesh_1.contour(scalars = "v", isosurfaces = [-0.007, 0.007])

pl.add_mesh(mesh_1.outline(), color="k")

pl.add_mesh(isos, opacity=1, cmap="coolwarm")

pl.subplot(0, 2)

isos = mesh_1.contour(scalars = "w", isosurfaces = [-0.015, 0.015])

pl.add_mesh(mesh_1.outline(), color="k")

pl.add_mesh(isos, opacity=1, cmap="coolwarm")

#pl.show()

# Capture the plot as an image and show it

image_path = "static_plot.png"

pl.screenshot(image_path)

pl.close()

display(Image(filename=image_path))

In this case, we can average in the azimuthal direction.

[10]:

# Load mass matrix

with h5py.File(query_points_fname, 'r') as f:

bm = f["mass"][:]

bm[np.where(bm == 0)] = 1e-12

# Load the mesh

with h5py.File(query_points_fname, 'r') as f:

r = f["r"][:]

th = f["th"][:]

z = f["z"][:]

# Load the interpolated data

with h5py.File("./rotated_mean_fields.hdf5", 'r') as f:

u = f["U_r"][:]

v = f["U_th"][:]

w = f["U_z"][:]

#Average in the azimuthal direction direction

u_rz = np.sum(u*bm, axis=1)/ np.sum(bm, axis=1)

v_rz = np.sum(v*bm, axis=1)/ np.sum(bm, axis=1)

w_rz = np.sum(w*bm, axis=1)/ np.sum(bm, axis=1)

levels = np.linspace(-0.05, 0.05, 100)

fig, ax = plt.subplots(1,3, figsize=(20,10))

ax[0].tricontourf(r[:,0,:].flatten(),z[:,0,:].flatten(), u_rz.flatten(), levels=levels, cmap="RdBu_r")

ax[1].tricontourf(r[:,0,:].flatten(),z[:,0,:].flatten(), v_rz.flatten(), levels=levels, cmap="RdBu_r")

ax[2].tricontourf(r[:,0,:].flatten(),z[:,0,:].flatten(), w_rz.flatten(), levels=levels, cmap="RdBu_r")

#plt.show()

fig.savefig("contours.png")

plt.close()

display(Image(filename="contours.png"))

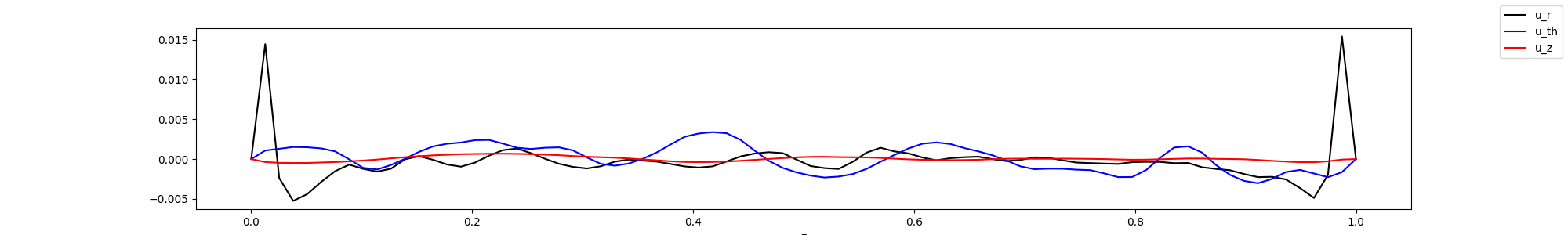

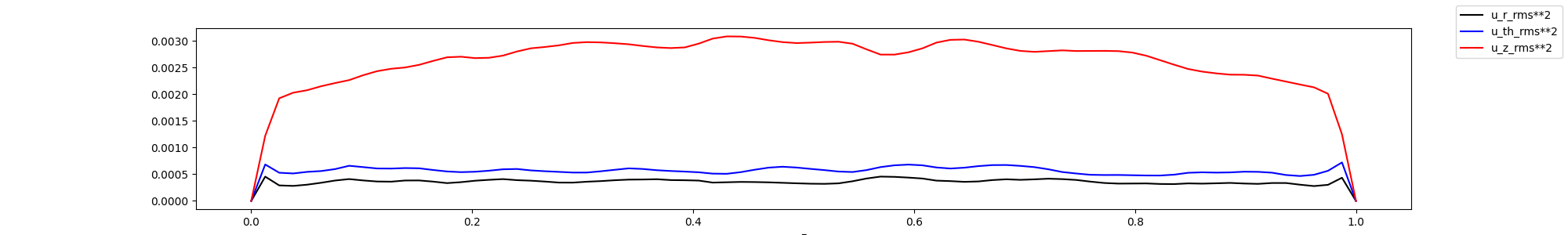

Plot the rotated values in 1d, by averaging in azimuthal and radial directions. In this case, this might not be so telling, as the flow is not homogenous in the radial direction, but it have give an indication on how the flow behaves.

In this case, we might have had too few points close to the wall, as we do not observe any peak in the z velocity component. Note that it would appear better if we take the square root.

[11]:

# Load mass matrix

with h5py.File(query_points_fname, 'r') as f:

bm = f["mass"][:]

bm[np.where(bm == 0)] = 1e-12

# Load the interpolated data

with h5py.File("./rotated_mean_fields.hdf5", 'r') as f:

u = f["U_r"][:]

v = f["U_th"][:]

w = f["U_z"][:]

with h5py.File("./rotated_reynolds_stress.hdf5", 'r') as f:

uu = f["U_rU_r"][:]

vv = f["U_thU_th"][:]

ww = f["U_zU_z"][:]

# Average in the azimuthal direction and radial direction

u_z = np.sum(u*bm, axis=(0,1))/ np.sum(bm, axis=(0,1))

v_z = np.sum(v*bm, axis=(0,1))/ np.sum(bm, axis=(0,1))

w_z = np.sum(w*bm, axis=(0,1))/ np.sum(bm, axis=(0,1))

uu_z = np.sum(uu*bm, axis=(0,1))/ np.sum(bm, axis=(0,1))

vv_z = np.sum(vv*bm, axis=(0,1))/ np.sum(bm, axis=(0,1))

ww_z = np.sum(ww*bm, axis=(0,1))/ np.sum(bm, axis=(0,1))

fig, ax = plt.subplots(1,1, figsize=(20,3))

ax.plot(z[0,0,:], u_z, '-k', label="u_r")

ax.plot(z[0,0,:], v_z, '-b', label="u_th")

ax.plot(z[0,0,:], w_z, '-r', label="u_z")

ax.set_xlabel("z")

fig.legend()

#plt.show()

fig.savefig("mean_fields.png")

plt.close()

display(Image(filename="mean_fields.png"))

fig, ax = plt.subplots(1,1, figsize=(20,3))

ax.plot(z[0,0,:], uu_z - u_z**2, '-k', label="u_r_rms**2")

ax.plot(z[0,0,:], vv_z - v_z**2, '-b', label="u_th_rms**2")

ax.plot(z[0,0,:], ww_z - w_z**2, '-r', label="u_z_rms**2")

ax.set_xlabel("z")

fig.legend()

fig.savefig("mean_rms.png")

#plt.show()

plt.close()

display(Image(filename="mean_rms.png"))